Aug 3, 2012

Public Summary Month 7/2012

TASK 3 + TASK 4:

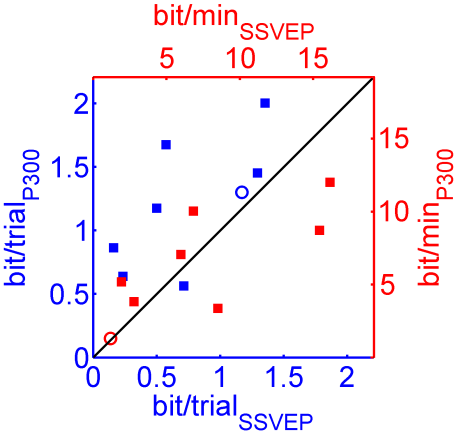

We finished verifying the object selection paradigms for grasp initiation. The P300 and SSVEP paradigms differ in the number of alternatives and in trial duration, but yield comparable detection rates and information transfer rates (bit/min, see Figure 1). We prefer the P300 paradigm over the SSVEP paradigm for object selection for three reasons: gaze-independent operation is feasible, the number of selectable objects is flexible, and subjects find the task more comfortable.

Figure 1: Direct comparison of bit rates between the SSVEP and P300 experiments (each square represents one subject). Blue data points are scaled in bit/trial, red data points in bit/min. Circles represent averages over subjects.
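The two scales in Figure 1 are related through the trial duration. The summary does not state which definition of bit rate was used; the sketch below assumes the widely used Wolpaw formula, and the function names and example numbers are purely illustrative.

```python
import math


def bits_per_trial(n_choices: int, accuracy: float) -> float:
    """Information per selection in bits (Wolpaw definition, assumed here)."""
    if n_choices < 2:
        raise ValueError("need at least two alternatives")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    bits = math.log2(n_choices)
    # The terms below vanish in the limits accuracy -> 0 and accuracy -> 1.
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_choices - 1))
    return bits


def bits_per_minute(n_choices: int, accuracy: float, trial_seconds: float) -> float:
    """Scale bit/trial to bit/min using the duration of one trial."""
    if trial_seconds <= 0.0:
        raise ValueError("trial duration must be positive")
    return bits_per_trial(n_choices, accuracy) * 60.0 / trial_seconds
```

Under this definition, a paradigm with more alternatives but longer trials can reach a similar bit/min value as one with fewer alternatives and shorter trials, which is consistent with the comparison described above.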

TASK 9:

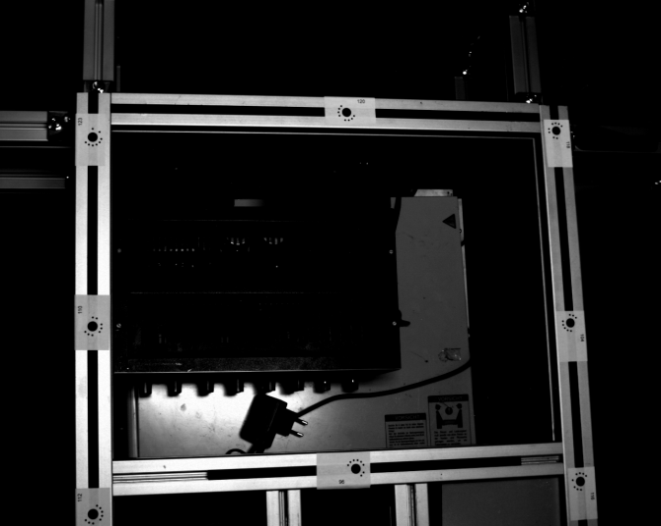

After calibration, matching pixels in the left and right camera images can be used to calculate their spatial depth. The resulting 3D points are initially defined in the camera coordinate system. The transformation to robot coordinates is calculated from calibration markers on the robot framework. Furthermore, we finished the image-based segmentation algorithm.
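As an illustration of the depth calculation, the following minimal sketch assumes a rectified pinhole stereo pair, so that a pixel match reduces to a horizontal disparity. The parameter names (`focal_px`, `baseline_m`, `cx`, `cy`) are assumptions for this example and do not come from the project's software.

```python
def pixel_to_camera_point(u, v, disparity, focal_px, baseline_m, cx, cy):
    """Back-project a matched pixel into the left camera's coordinate system.

    u, v       : pixel coordinates in the left image
    disparity  : horizontal offset (in pixels) to the matching right-image pixel
    focal_px   : focal length in pixels (from calibration)
    baseline_m : distance between the two camera centers in meters
    cx, cy     : principal point in pixels (from calibration)
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    z = focal_px * baseline_m / disparity  # depth along the optical axis
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return (x, y, z)
```

Because depth is inversely proportional to disparity, small matching errors on distant points produce large depth errors, which is one possible source of isolated artifact points in a reconstructed scene.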

Figure 3: Markers on the robot framework

Figure 4: Recognized grasp targets
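The summary does not say how the camera-to-robot transformation is computed from the markers. One common choice, shown here only as a sketch under that assumption, is a least-squares rigid fit (Kabsch algorithm) between marker positions measured in camera coordinates and their known positions in robot coordinates. It uses NumPy; the function name is hypothetical.

```python
import numpy as np


def estimate_rigid_transform(cam_pts, robot_pts):
    """Return (R, t) such that robot_point ~= R @ cam_point + t.

    cam_pts, robot_pts: (N, 3) arrays of corresponding marker positions,
    N >= 3 and not all collinear.
    """
    cam = np.asarray(cam_pts, dtype=float)
    rob = np.asarray(robot_pts, dtype=float)
    if cam.shape != rob.shape or cam.ndim != 2 or cam.shape[1] != 3 or cam.shape[0] < 3:
        raise ValueError("need two matching (N, 3) arrays with N >= 3")

    cam_centroid = cam.mean(axis=0)
    rob_centroid = rob.mean(axis=0)

    # Cross-covariance of the centered point sets.
    H = (cam - cam_centroid).T @ (rob - rob_centroid)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection: force det(R) = +1.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0.0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = rob_centroid - R @ cam_centroid
    return R, t
```

With `R` and `t` in hand, a whole point cloud in camera coordinates can be mapped to robot coordinates with `points @ R.T + t`.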

To deal with artifacts, we added an algorithm that analyzes the neighborhood of each 3D point. Figure 5 shows the result.

Figure 5: Overlay of the original and the artifact-cleaned scene. Orange points were identified as artifacts and removed.
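The exact neighborhood criterion is not given in this summary. A simple variant of the idea, sketched here as an assumption, is to discard every point that has too few neighbors within a fixed radius. The names `radius` and `min_neighbors` are illustrative parameters, and the brute-force distance matrix is only suitable for small clouds (a k-d tree would be the usual choice for full scenes).

```python
import numpy as np


def remove_isolated_points(points, radius, min_neighbors):
    """Drop points with fewer than `min_neighbors` other points within `radius`.

    Returns the filtered (M, 3) array and the boolean keep-mask of length N.
    """
    pts = np.asarray(points, dtype=float)
    # Pairwise squared distances, shape (N, N); O(N^2) memory.
    diff = pts[:, None, :] - pts[None, :, :]
    dist_sq = np.einsum("ijk,ijk->ij", diff, diff)
    # Count neighbors inside the radius, excluding the point itself.
    neighbor_counts = (dist_sq <= radius ** 2).sum(axis=1) - 1
    keep = neighbor_counts >= min_neighbors
    return pts[keep], keep
```

The inverted mask (`~keep`) marks the points that would be flagged as artifacts in an overlay such as the one described in the caption above.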

TASK 10:

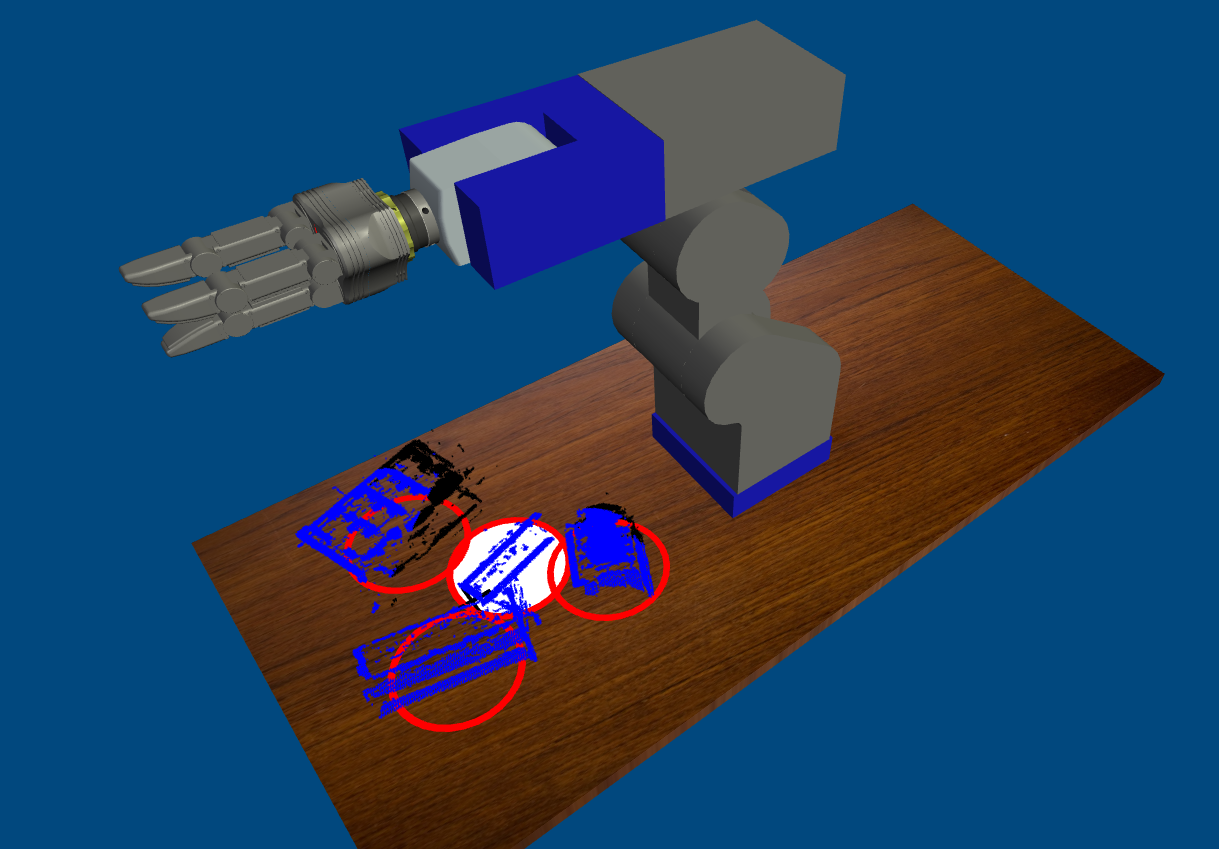

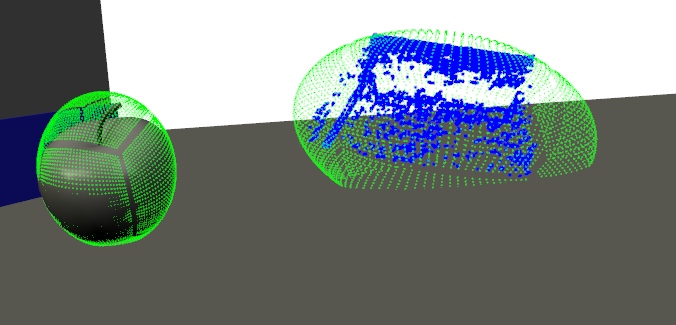

We realized that we need to fine-tune our strategy for dealing with large holes in the grasp targets that result from object recognition. Our current strategy is to place the point-poles used for force-based grasp planning on a bounding ellipsoid that contains the object (see Figure 6).

Figure 6: Distributed point-charges for force-based grasp planning of 3D-recognized objects
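How the bounding ellipsoid is fitted and how the poles are distributed on it is not described here. The following sketch makes two assumptions of its own: the ellipsoid is axis-aligned and obtained by scaling the bounding box half-extents by √3 (which guarantees that every box corner, and hence every object point, lies inside), and the poles are spread with a Fibonacci lattice. Both function names are hypothetical.

```python
import numpy as np


def bounding_ellipsoid(points):
    """Axis-aligned ellipsoid (center, semi_axes) that contains all points."""
    pts = np.asarray(points, dtype=float)
    lo, hi = pts.min(axis=0), pts.max(axis=0)
    center = (lo + hi) / 2.0
    # Avoid zero-length axes for flat or degenerate objects.
    half_extents = np.maximum((hi - lo) / 2.0, 1e-9)
    # A box corner satisfies sum((x_i / a_i)^2) = 1 when a_i = sqrt(3) * half_extent_i.
    semi_axes = np.sqrt(3.0) * half_extents
    return center, semi_axes


def poles_on_ellipsoid(center, semi_axes, n_poles):
    """Place n_poles points on the ellipsoid surface via a scaled Fibonacci sphere."""
    i = np.arange(n_poles) + 0.5
    z = 1.0 - 2.0 * i / n_poles
    r = np.sqrt(1.0 - z ** 2)
    phi = i * np.pi * (3.0 - np.sqrt(5.0))  # golden-angle increments
    unit = np.stack([r * np.cos(phi), r * np.sin(phi), z], axis=1)
    return np.asarray(center) + unit * np.asarray(semi_axes)
```

Because the poles lie on a closed surface around the object, their placement does not depend on whether the recognized surface has holes. Note that scaling a sphere lattice to an ellipsoid does not give exactly uniform spacing on strongly elongated objects.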