Feb 29, 2012

Public Summary Month 2/2012

We have built two example collections of commands to model the two application domains. The sentences were collected from the students of the class “Seminars AI and Robotics”, section on Natural Language Processing. The goal was to experiment with the process of acquiring domain-specific information; the collections represent a first characterization of domain-specific knowledge in terms of robot capabilities, environments and tasks, not the final resources for language generation. We have combined the collection of lexical units defined in the RoboFrameNet environment (available in ROS) with the more general representation provided by WordNet, and defined a first characterization of the possible wordings of the commands.
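As a rough illustration of how frame-based lexical units could be widened with more general synonyms, here is a minimal sketch. Everything in it is an assumption made for the example: the frame names, the lexical units, and the small `SYNONYMS` table, which merely stands in for WordNet lookups. It is not the project's actual resource or code.

```python
# Hypothetical lexical units per frame; the real ones come from RoboFrameNet.
FRAME_LEXICAL_UNITS = {
    "Motion": {"go", "move"},
    "Taking": {"take", "grab"},
}

# Toy stand-in for WordNet synonym lookups (assumed data, not real synsets).
SYNONYMS = {
    "go": {"travel", "proceed"},
    "take": {"pick", "get"},
}


def expand_wordings(frame_units, synonyms):
    """Return, for each frame, its lexical units plus their listed synonyms."""
    expanded = {}
    for frame, units in frame_units.items():
        words = set(units)
        for unit in units:
            words |= synonyms.get(unit, set())
        expanded[frame] = words
    return expanded


def frames_for_word(word, expanded):
    """Return the sorted names of the frames whose wordings contain the word."""
    return sorted(frame for frame, words in expanded.items() if word in words)
```

With this table, a verb such as "pick" that is not a lexical unit itself can still be traced back to the hypothetical "Taking" frame through its synonym entry.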

We have defined a methodology for testing the performance of our system. The baseline will be a system in which a set of sentences from a corpus is associated with a set of commands. The performance of the customized system will be compared against this baseline through systematic experiments on a set of vocal data recorded from different users. A dedicated tool has been built to support the systematic evaluation of system performance.
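The baseline idea can be sketched in a few lines. The sketch below assumes that the recorded utterances have already been transcribed to text, and it uses exact matching after whitespace and case normalization; the class name, the command labels and the accuracy measure are all hypothetical choices for illustration, not a description of the evaluation tool itself.

```python
def normalize(sentence):
    """Lower-case the sentence and collapse runs of whitespace."""
    return " ".join(sentence.lower().split())


class BaselineSystem:
    """Maps each known corpus sentence directly to a command label."""

    def __init__(self, corpus):
        # corpus: iterable of (sentence, command) pairs
        self.table = {normalize(s): command for s, command in corpus}

    def interpret(self, sentence):
        """Return the associated command, or None for unknown sentences."""
        return self.table.get(normalize(sentence))


def accuracy(system, test_set):
    """Fraction of (sentence, expected_command) pairs interpreted correctly."""
    if not test_set:
        return 0.0
    correct = sum(
        1 for sentence, expected in test_set
        if system.interpret(sentence) == expected
    )
    return correct / len(test_set)
```

Any other system exposing the same `interpret` method could be scored with `accuracy` on the same test set, which is one simple way to make the comparison with the baseline systematic.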

The first technical report gives a comprehensive account of the first activities carried out in the project.

Dec 29, 2011

Public Summary Month 12/2011

The experiment started in November, with the signature of the contract still pending.

Activities have focussed on the analysis and the initial steps of the design, specifically: Robotic Domain Definition and Representation, and Robotic Voice Development Kit Design.

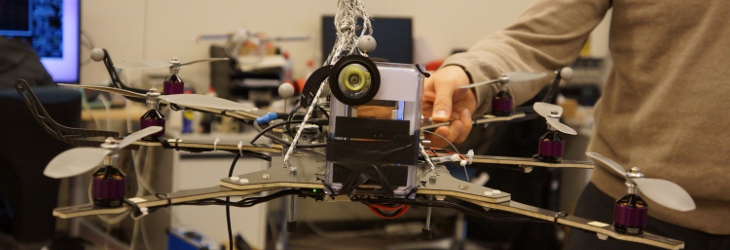

The main step accomplished in task 1 is the implementation of a prototype for interacting, through Speaky, with both the NAO humanoid robot by Aldebaran and a small wheeled Erratic robot by Videre Design. The goal is to acquire some practical experience of the possible wordings used to command the robot. In implementing the prototype on the two robots we have provided a first characterization of the robot capabilities, both for a humanoid robot and for a wheeled robot.

The main steps accomplished in task 2 consist of a first architectural design, developed while implementing the prototype described above, which is specifically concerned with the communication between Speaky and the mobile platform. We have also examined the internal structure of the Speaky development environment and identified two key components: the dynamic grammar manager and the dialogue manager. The first is instrumental in providing suitable data for linguistic post-processing of the speech recognition output; the second needs to integrate the state of the dialogue with the state of the robot.
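One possible way to picture the interplay of the two components is sketched below. This is only an assumption about how such a design might look, not the Speaky architecture: the class names, the robot states ("idle", "moving") and the phrase sets are all invented for the example. The grammar manager exposes the phrases that are valid in the current context, and the dialogue manager switches that context whenever the robot state changes.

```python
class DynamicGrammarManager:
    """Tracks which command phrases are active (hypothetical design)."""

    def __init__(self, phrases_by_context):
        # phrases_by_context: dict mapping a context name to a set of phrases
        self.phrases_by_context = phrases_by_context
        self.active_context = None

    def activate(self, context):
        """Select the context whose phrases should currently be accepted."""
        self.active_context = context

    def active_phrases(self):
        """Return the phrases valid in the active context (empty if none)."""
        return self.phrases_by_context.get(self.active_context, set())


class DialogueManager:
    """Keeps the active grammar aligned with the robot state (hypothetical)."""

    def __init__(self, grammar, initial_state="idle"):
        self.grammar = grammar
        self.robot_state = initial_state
        self.grammar.activate(initial_state)

    def update_robot_state(self, state):
        """Record the new robot state and switch the active grammar to match."""
        self.robot_state = state
        self.grammar.activate(state)

    def handle(self, recognized_text):
        """Accept the recognized text only if it is valid in the current state."""
        if recognized_text in self.grammar.active_phrases():
            return ("execute", recognized_text)
        return ("reject", recognized_text)
```

In this sketch a "stop" command is rejected while the robot is idle and accepted once the state is updated to "moving", which shows in miniature why the dialogue state and the robot state need to be kept together.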