Sep 4, 2012

Public Summary Month 7/2012

Results from the previous tasks were transferred to the scenario of our industrial partner MEA, which employs an industrial robot to solve a real-world bin-picking task. A stereoscopic laser scanner acquires depth images of filled transport boxes. The cylindrical objects relevant for the application are detected in all orientations and under various occlusions. Path planning is simplified by restricting robot motion inside the box to straight vertical moves from above, which is made possible by an additional seventh axis that ends in a magnetic gripper.
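The top-down restriction can be illustrated with a minimal sketch. It assumes a box-aligned frame with the z axis pointing up; the function name, the clearance, and the step size are hypothetical and not taken from the MEA setup.

```python
import math


def vertical_approach_path(grasp_xyz, box_top_z, clearance=0.10, step=0.05):
    """Waypoints that descend straight down to the grasp point and retreat the same way.

    clearance and step (in metres) are illustrative assumptions.
    """
    x, y, z_grasp = grasp_xyz
    z_start = box_top_z + clearance  # approach height above the box rim
    if z_grasp > z_start:
        raise ValueError("grasp point lies above the approach height")
    n_steps = max(1, math.ceil((z_start - z_grasp) / step))
    # Only z changes inside the box; x and y stay fixed at the grasp position.
    descent = [
        (x, y, z_start + (z_grasp - z_start) * i / n_steps)
        for i in range(n_steps + 1)
    ]
    # Retreat along the same vertical line, without repeating the grasp point.
    return descent + descent[-2::-1]


path = vertical_approach_path((0.30, 0.20, 0.05), box_top_z=0.30)
for waypoint in path:
    print(waypoint)
```

Because every waypoint shares the same x and y, collision checking inside the box reduces to checking a single vertical line per grasp candidate.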

May 31, 2012

Public Summary Month 5/2012

We conducted experiments in which the robot has to actively perceive partially occluded objects. They showed that considering object detection results when planning the next view can help to find more objects.

We integrated the components developed in ActReMa into a bin-picking application, performed by the cognitive service robot Cosero at UBO. The robot navigates to the transport box, aligns to it, acquires 3D scans, recognizes objects, plans grasps, executes the grasping, navigates to a processing station, and places the object there.

Mar 28, 2012

Public Summary Month 3/2012

For learning object models, we made initial scan alignment more robust by adapting the point-pair feature object detection and pose estimation method of Papazov et al. (ACCV 2010). We only allow transformations that are close to the expected ones in the RANSAC step.
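The constraint on RANSAC hypotheses can be sketched as a simple acceptance test. This is only an illustration under stated assumptions: a hypothesis is a (rotation, translation) pair, closeness is measured by the relative rotation angle and the distance between the translation vectors, and the thresholds are hypothetical. The project's actual criterion may differ.

```python
import math


def rotation_angle_between(r_a, r_b):
    """Angle in radians of the relative rotation r_a^T * r_b (3x3 nested lists)."""
    # trace(r_a^T r_b) equals the sum of the element-wise products.
    trace = sum(r_a[i][j] * r_b[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.acos(cos_angle)


def is_close_to_expected(candidate, expected, max_angle_rad, max_dist):
    """Accept a (rotation, translation) hypothesis only if it is near the expected one."""
    (r_c, t_c), (r_e, t_e) = candidate, expected
    angle = rotation_angle_between(r_e, r_c)
    dist = math.dist(t_c, t_e)
    return angle <= max_angle_rad and dist <= max_dist


def rot_z(theta):
    """Rotation about the z axis, used here only to build example hypotheses."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


expected = (rot_z(0.0), (0.0, 0.0, 0.0))
hypotheses = [
    (rot_z(math.radians(5)), (0.01, 0.0, 0.0)),   # small deviation
    (rot_z(math.radians(90)), (0.0, 0.0, 0.0)),   # rotation far off
    (rot_z(0.0), (0.5, 0.0, 0.0)),                # translation far off
]
accepted = [
    h for h in hypotheses
    if is_close_to_expected(h, expected, math.radians(15), 0.05)
]
print(len(accepted))
```

Rejecting implausible hypotheses before they are scored keeps a symmetric or repetitive scene from pulling the alignment towards a wrong transformation.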

For active object perception, we extended the simulation to include the complete experiment setup, adapted the object recognition method to identify regions of interest, and integrated the planning of the next best view (NBV).

Jan 27, 2012

Public Summary Month 1/2012

For learning object models, we designed a board that allows multiple object instances to be scanned in different orientations. The individual object views are segmented and registered. In the resulting point cloud, geometric primitives are detected.

For active perception, sensor poses are sampled above the detected transport box. Their utility is evaluated according to the amount of unknown volume and the points not explained by detected primitives. The pose with the highest utility is chosen to acquire a new range scan.
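The selection step described above can be sketched as follows. The summary does not say how the two criteria are combined, so the weighted sum, the weights, and the `CandidateView` structure are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CandidateView:
    """A sampled sensor pose above the transport box (hypothetical structure)."""
    name: str
    unknown_volume: float     # unknown volume visible from this pose
    unexplained_points: int   # visible points not explained by detected primitives


def utility(view, w_unknown=1.0, w_unexplained=0.01):
    """Weighted sum of both criteria; the weights are illustrative assumptions."""
    return w_unknown * view.unknown_volume + w_unexplained * view.unexplained_points


def select_next_view(candidates, **weights):
    """Return the candidate pose with the highest utility."""
    if not candidates:
        raise ValueError("no candidate views sampled")
    return max(candidates, key=lambda v: utility(v, **weights))


candidates = [
    CandidateView("left", unknown_volume=2.0, unexplained_points=150),
    CandidateView("top", unknown_volume=3.5, unexplained_points=40),
    CandidateView("right", unknown_volume=1.0, unexplained_points=400),
]
best = select_next_view(candidates)
print(best.name)
```

With these example numbers the pose named `right` wins because of its many unexplained points. Setting `w_unexplained=0` turns the selection into a pure exploration of unknown volume, which favours `top`.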

Nov 27, 2011

Public Summary Month 11/2011

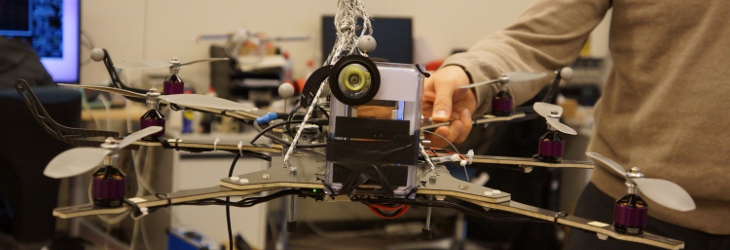

The Metronom 3D sensor has been mounted on the mobile robot Dynamaid. Its measurements are more precise and less noisy than Kinect measurements.

The experiment partners started work on the learning of object models from examples and active object recognition.

For the learning of object models, scans from different views are registered and unconstrained detection of geometric primitives is performed (Fig. 1).

For active object recognition, we also registered depth measurements from different views (Fig. 2). This reduces occlusion effects and facilitates recognition.
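Once the sensor pose of each view is known, merging the views amounts to transforming every scan into a common frame. The sketch below assumes the poses are already given as a rotation matrix and a translation vector; estimating them is the actual registration problem and is not shown. All names are hypothetical.

```python
def transform_point(rotation, translation, p):
    """Map point p from the sensor frame into the common frame: R * p + t."""
    return tuple(
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def merge_views(views):
    """Concatenate all scans after transforming them into the common frame.

    views: iterable of (rotation, translation, points) triples.
    """
    merged = []
    for rotation, translation, points in views:
        merged.extend(transform_point(rotation, translation, p) for p in points)
    return merged


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
half_turn_z = [[-1, 0, 0], [0, -1, 0], [0, 0, 1]]  # 180 degrees about z

views = [
    # View 1 coincides with the common frame.
    (identity, (0.0, 0.0, 0.0), [(0.5, 0.0, 0.2), (0.2, 0.1, 0.2)]),
    # View 2 looks from the opposite side; its second point is hidden from view 1.
    (half_turn_z, (1.0, 0.0, 0.0), [(0.5, 0.0, 0.2), (0.3, 0.0, 0.1)]),
]
cloud = merge_views(views)
print(len(cloud))
```

In this example both views observe the same surface point, which lands on identical coordinates in the merged cloud, while the second view also contributes a point that the first could not see.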